Hive

Protect your platform with CSAM Detection

Safeguard your platform and users with Hive's enhanced CSAM Detection and child safety solutions to help you identify, remove, and report harmful content more effectively.

Delivering CSAM detection across images, video and text through technology from:

CSAM Detection API and CSE Text Classifier API bring together Safer by Thorn’s proprietary technology and Hive's enterprise-grade cloud-based APIs

Learn about CSAM Detection API

Streamline submissions of required reports to the CyberTipline with NCMEC reporting integrated into Hive’s Moderation Dashboard

See NCMEC workflow

Introducing CSE Text Classifier API

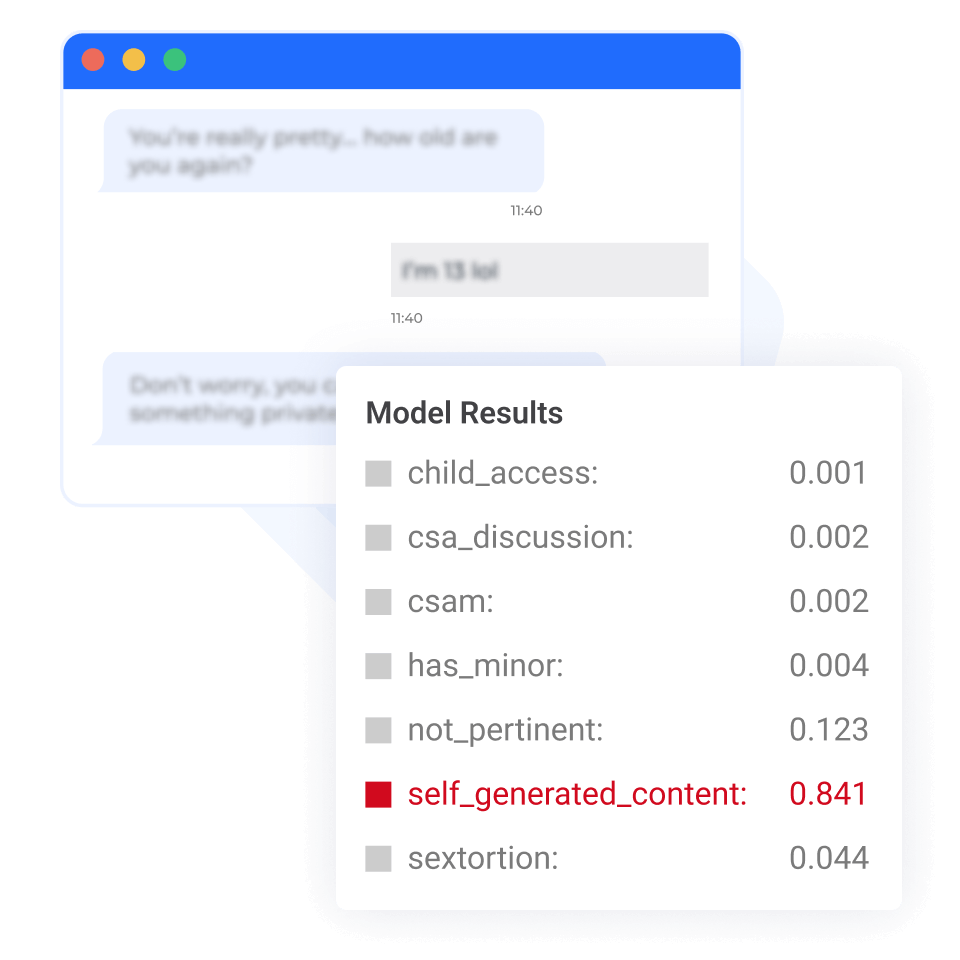

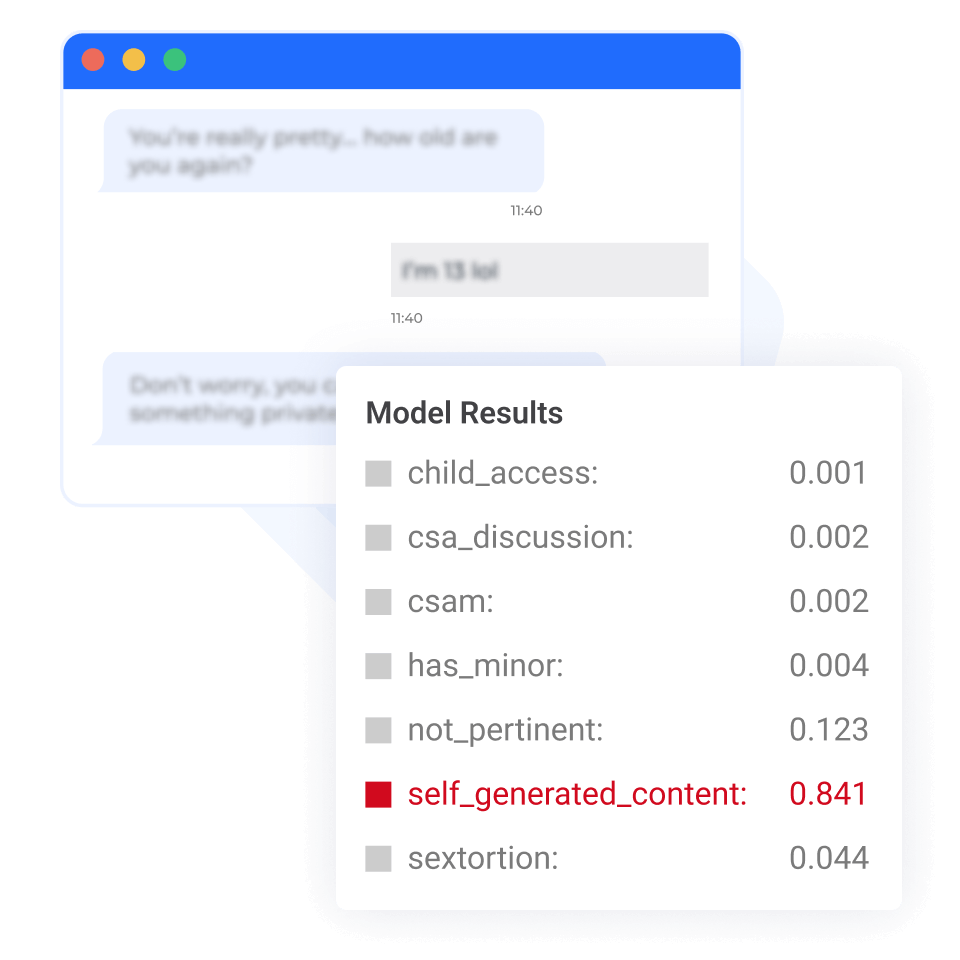

We've expanded Hive's CSAM detection suite to include child sexual exploitation (CSE) text classification, featuring trusted technology by Thorn.

The CSE Text Classifier API empowers platforms to proactively detect potential text-based child sexual exploitation in chats, comments and other UGC text fields.

Proactively detect CSE in text-based content

Proprietary machine learning classification model (a.k.a. “text classifier”) developed by Thorn

Categorizes content and assigns a risk score across key classes: CSAM, Child access, Sextortion, Self-generated content and CSA discussion

Enterprise-grade processing via Hive's cloud-based APIs, seamlessly integrated into production workflows
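
As a rough illustration of how per-class risk scores like these might be consumed downstream, the sketch below picks the highest-scoring class from a score mapping and flags it only when it crosses a threshold. The dictionary shape, the snake_case class keys, the `top_risk` helper, and the 0.8 threshold are all assumptions made for illustration, not the documented response format.

```python
from typing import Dict, Optional, Tuple

# Class names follow the list above; the snake_case keys are assumed.
CLASSES = (
    "csam",
    "child_access",
    "sextortion",
    "self_generated_content",
    "csa_discussion",
)

def top_risk(scores: Dict[str, float], threshold: float = 0.8) -> Optional[Tuple[str, float]]:
    """Return (class, score) for the highest-scoring class at or above threshold, else None."""
    # Missing classes are treated as a score of 0.0.
    known = {name: scores.get(name, 0.0) for name in CLASSES}
    label, score = max(known.items(), key=lambda item: item[1])
    return (label, score) if score >= threshold else None
```

A platform would typically tune the threshold per class and route anything flagged to human review rather than acting on the score alone.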

Proactively detect known and new CSAM at scale

CSAM is a serious risk

Platforms with user-generated content face challenges in preventing child sexual abuse material (CSAM). Failing to address CSAM risks can seriously impact a platform's stability and viability.

Protect your platform

Built by experts in child safety technology, Safer is a comprehensive solution that detects both known and new CSAM for every platform with an upload button or messaging capabilities.

Industry-leading CSAM Detection by Thorn

Harness industry-leading CSAM detection developed by Thorn, a trusted leader in the fight against online child sexual abuse and exploitation.

Seamless integration through Hive

Process high volumes with ease using Hive’s real-time API responses. Model responses are accessible with a single API call for each product.

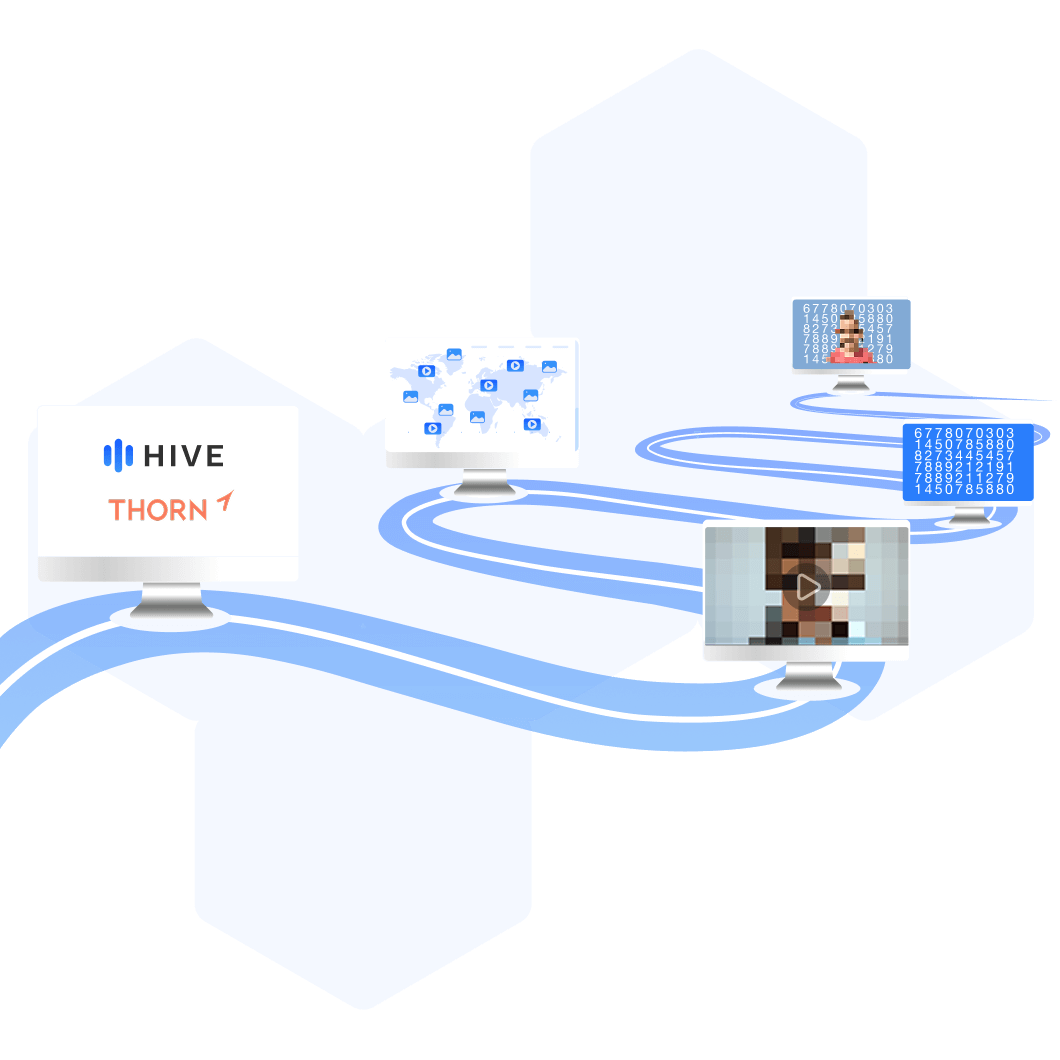

How CSAM Detection works

Hive's CSAM Detection suite unifies hash matching, best-in-class AI classification, and integrated reporting to help you ensure that user-generated content is safe.

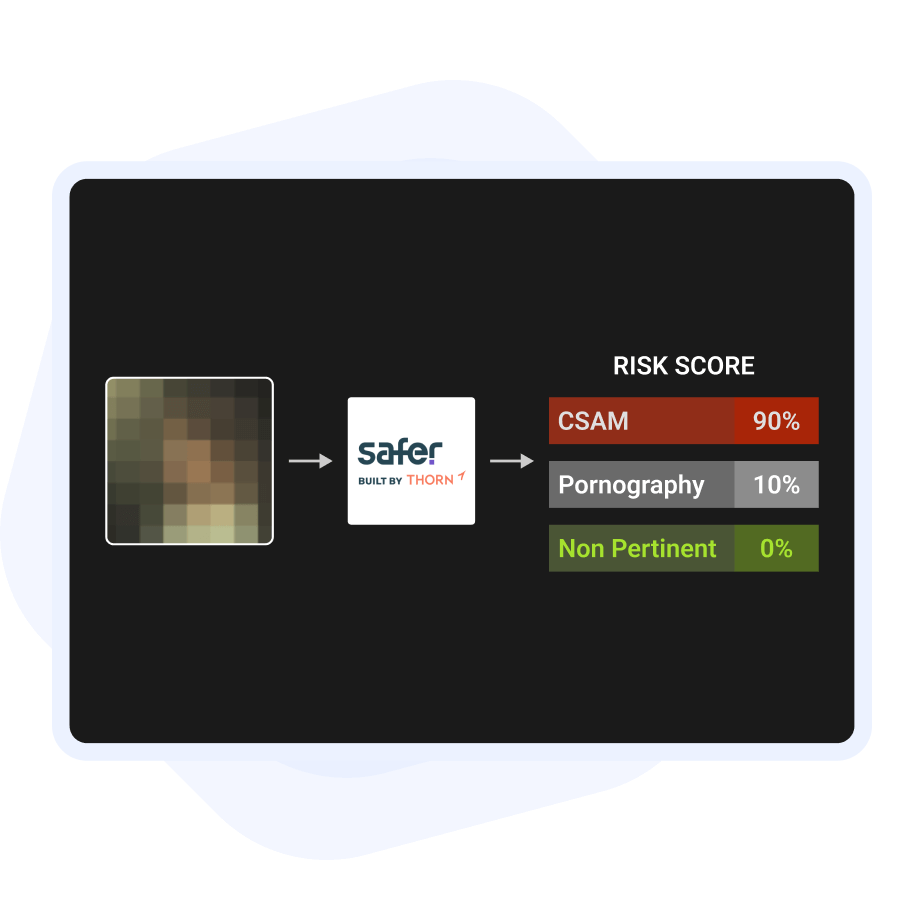

Detect new CSAM with AI Image and Video Classifier

Utilizes state-of-the-art machine learning models

Classifies content into three categories: CSAM, pornography, and benign

Generates risk scores for faster human decision-making

Trained in part using trusted data from the NCMEC CyberTipline and served exclusively on Hive's APIs
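
To show how risk scores can speed up human decision-making, here is a minimal sketch that orders flagged items so reviewers see the highest-risk content first. The `Classified` record, the score keys, and the 0.5 cutoff are hypothetical and chosen only for illustration; they are not taken from the API.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Classified:
    content_id: str
    scores: Dict[str, float]  # assumed keys: "csam", "pornography", "benign"

def review_queue(items: List[Classified], min_score: float = 0.5) -> List[str]:
    """Order content IDs for human review, highest CSAM score first."""
    # Keep only items whose CSAM score reaches the (assumed) cutoff.
    flagged = [i for i in items if i.scores.get("csam", 0.0) >= min_score]
    flagged.sort(key=lambda i: i.scores["csam"], reverse=True)
    return [i.content_id for i in flagged]
```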

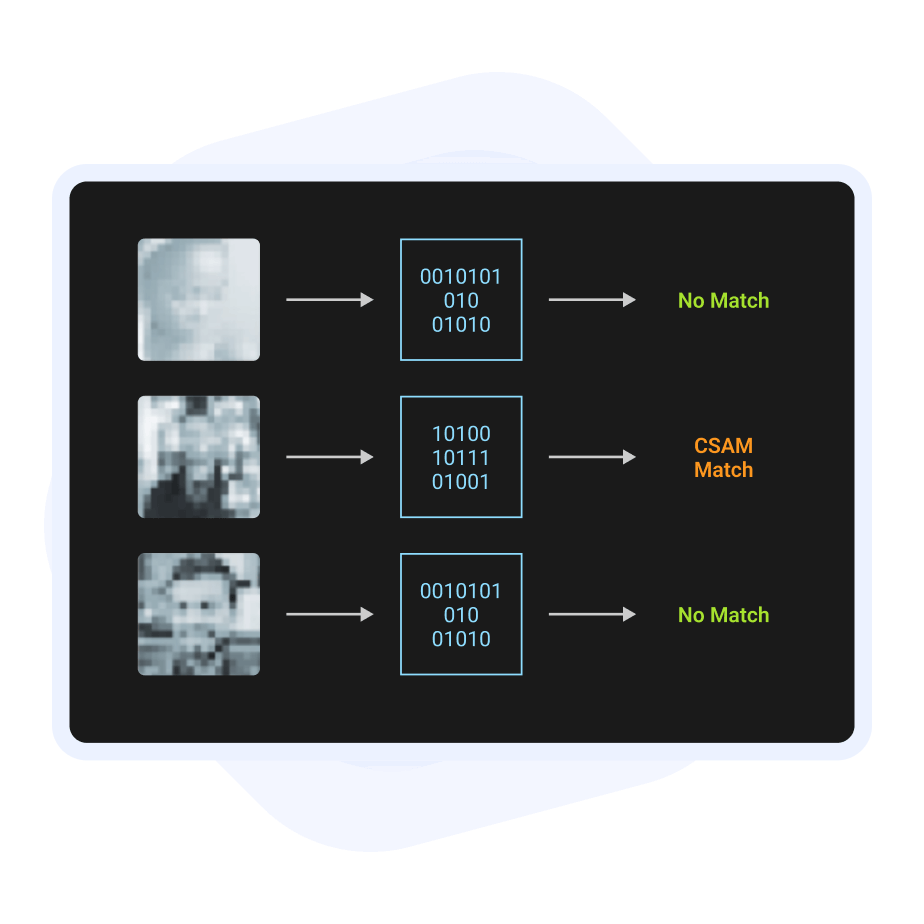

Identify known CSAM with Hash Matching

Securely matches content against a trusted CSAM database of more than 57 million hashes for wide-ranging detection

Detects manipulated images using Thorn’s proprietary perceptual hashing technology

Implements proprietary scene-sensitive video hashing (SSVH) to identify known CSAM within video content

IWF members can also access IWF’s image and video hashes of CSAM content through our CSAM Detection API.
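
Perceptual hash matching in general compares compact fingerprints and tolerates a small number of differing bits, which is why lightly altered copies can still match. The sketch below shows that generic idea using Hamming distance over integer hashes. It is not Thorn's proprietary hashing or SSVH; the function names and the `max_distance` of 6 are illustrative assumptions.

```python
from typing import Iterable, Optional

def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two integer hashes."""
    return bin(a ^ b).count("1")

def find_match(candidate: int, known_hashes: Iterable[int], max_distance: int = 6) -> Optional[int]:
    """Return the closest known hash within max_distance bits, or None."""
    best = None
    best_dist = max_distance + 1
    for known in known_hashes:
        dist = hamming_distance(candidate, known)
        if dist < best_dist:
            best, best_dist = known, dist
    return best
```

A linear scan like this is only for explanation; a list of tens of millions of hashes would need an indexed lookup instead.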

Detect text-based exploitation with AI Text Classifier

Proactively combat potential text-based child sexual exploitation at scale, in conversations, comments, and messages

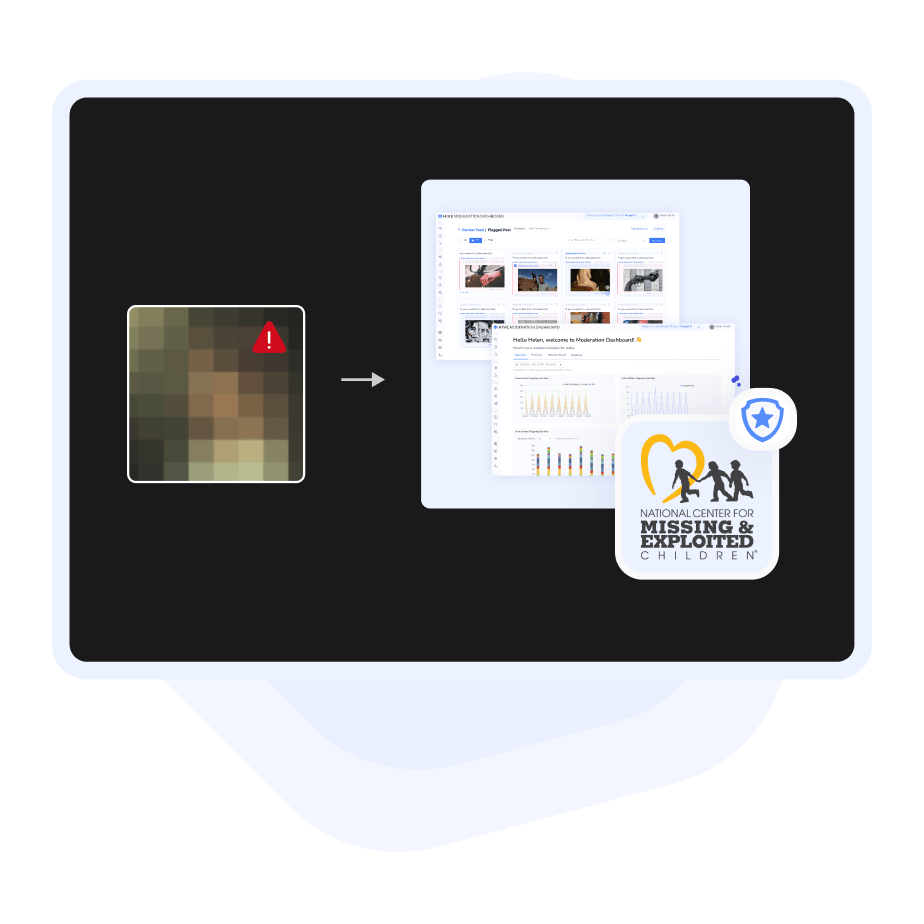

Seamless reporting with Moderation Dashboard

Streamline submissions of required reports to the CyberTipline — saving you time while ensuring your platform meets relevant legal obligations for CSAM reporting

Streamline CSAM Reports with our NCMEC Integration

Why choose Hive

Protect your platform from both known and emerging CSAM threats with a single, scalable solution — from advanced detection to integrated moderation workflows.

Comprehensive CSAM coverage

Access comprehensive image and video CSAM and CSE text detection through a single API endpoint for each solution.

Text classification available

Detect harmful text content with Hive's new CSE Text Classifier API powered by Thorn, giving your team even more coverage across formats.

Speed at scale

Hive handles billions of pieces of content each month with high performance — so protecting your users never slows you down.

Seamless integration

With the CSAM Detection API, hash matches and model responses are accessible with a single API call. Integrate Safer by Thorn into any application with just a few lines of code.

Proactive updates

Thorn maintains a database of 57M+ known CSAM hashes and receives regular NCMEC updates. IWF members can access IWF's hashes.

Integrated Moderation Dashboard

Manage, review, and escalate flagged content to NCMEC from one interface.

Learn More

Developer-friendly integration

Connect in minutes, not months.

Our API is designed for hassle-free integration, with easy-to-use endpoints that let you submit images or entire videos and retrieve structured results.

Why developers love Hive APIs

Simple, RESTful endpoints with fast, predictable responses.

Production-ready JSON that contains easily parseable labels and scores.

Developer docs with code samples, libraries, and quick start guides.
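
To give a feel for what parsing labels and scores out of a JSON response might look like, here is a minimal sketch. The payload shape (a `classes` list with `label` and `score` fields) is invented for illustration and is not Hive's documented response schema; the developer docs are the place to find the real field names.

```python
import json
from typing import Dict

# Hypothetical response body; the real schema may differ.
SAMPLE_RESPONSE = """
{"classes": [
  {"label": "csam", "score": 0.02},
  {"label": "pornography", "score": 0.11},
  {"label": "benign", "score": 0.87}
]}
"""

def parse_scores(body: str) -> Dict[str, float]:
    """Turn the assumed JSON payload into a {label: score} mapping."""
    payload = json.loads(body)
    return {entry["label"]: float(entry["score"]) for entry in payload.get("classes", [])}

scores = parse_scores(SAMPLE_RESPONSE)
top_label = max(scores, key=scores.get)  # label with the highest score
```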